So, this is the edge?

Or, is it the edge?

It’s hard to know.

Like the event horizon of a massive black hole,

it bends a little more steeply at every step;

but never so you would notice.

It’s as if I know I’m being swept away,

but as anything caught in the current,

I feel no movement…

just a persistent screaming in the back of my mind –

an itchy thought –

a superposition of sheer panic, and dead calm.

Like camping on the boundary

between the immovable object

and the irresistible force –

carefully balanced so that time stands still;

with unimaginable energy, poised

just a twitch away from cataclysm.

I stare into the abyss, and perhaps, later,

if not otherwise occupied,

I will sit in the dark and sink slowly into madness.

I wish I had the magic words

to bring you safely back (to me)

to feel your touch

to hear your voice

and hold you by my side

I watch your likeness on machines

hear your vibrations in my ears

you’re far away

but oh so close

a sparkle in my mind

I wish I had the magic words

but somehow they won’t come (to me)

still I can feel

your memory

stirring deep inside

Impulses and waves, electricity,

invisible forces, flow in between

I don’t recall imagining

a world unreal as this

Reach out to me from where you are

as I reach out from here (to you)

imagine it’s

my warm embrace

and don’t let it subside

I wish I had the magic words

to bring you safely back (to me)

to feel your touch

to hear your voice

and hold you by my side

It seemed obvious enough. I mean, I’ve been using these for as long as I can remember, but the other day I used the term SOP and somebody said “what’s that?”

An SOP, or “Standard Operating Procedure,” is a collaborative tool I use in my organizations to build intellectual equity. Intellectual equity is what you get when you capture domain knowledge from wetware and make it persistent.

In order to better explain this, and make it more obvious and shareable, I offer you this SOP on SOPs. Enjoy!

This is an SOP about making SOPs.

It describes what an SOP looks like by example.

SOPs are files describing Standard Operating Procedures.

In general, the first thing in an SOP is a description of the SOP

including some text to make it easy to find - kind of like this one.

[ ] Create an SOP

An SOP is a kind of check list. It should be just detailed enough so

that a reasonably competent individual for a given task can use the

SOP to do the task most efficiently without missing any important

steps.

[ ] Store an SOP as a plain text file for use on any platform.

[ ] Be mindful of security concerns.

[ ] Try to make SOPs generic without making them obscure.

[ ] Be sure they are stored and accessed appropriately.

[ ] Some SOPs may not be appropriate for some groups.

[ ] Some SOPs might necessarily contain sensitive information.

[ ] Use square brackets to describe each step.

[ ] Indent to describe more specific (sub) steps such as below...

[ ] View theFile from the command line

[ ] cat theFile

Note: Sometimes you might want to make a note.

And, sometimes a step might be an example of what to type or

some other instruction to manipulate an interface. As long as

it's clear to the intended reader it's good but keep in mind

that this is a check list so each instruction should be

a single step or should be broken down into smaller pieces.

[ ] When leaving an indented step, skip a line for clarity.

< > If a step is conditional.

[ ] use angle brackets and list the condition.

[ ] Use parentheses (round brackets) to indicate exclusive options.

Note: Parentheses are reminiscent of radio buttons.

( ) You can do this thing or

( ) You can do this other thing or

( ) You can choose this third thing but only one at this level.

[ ] Use an SOP (best practice)

[ ] Make a copy of the SOP.

[ ] Save the copy with a unique name that fits with your workflow

[ ] Be consistent

[ ] Mark up the copy as you go through the process.

[ ] Check off each step as you proceed.

Note: This helps you (or someone) to know where you are in the

process if you get interrupted. That never happens right?!

[ ] Brackets with a space are not done yet.

[ ] Use a * to mark an unfinished step you are working on.

[ ] Use an x to mark a step that is completed.

[ ] Use a - to indicate a step you are intentionally skipping.

[ ] Add notes when you do something unexpected.

< > If skipping a step - why did you skip it?

< > If doing steps out of order.

< > If you run into a problem and have to work around it.

< > If you think the SOP should be changed, why?

< > If you use different marks explain them at the top.

[ ] Make a legend for your marks.

[ ] Collaborate with the team so everybody will understand.

[ ] Add notes to include any important specific data.

[ ] User accounts or equipment references.

[ ] Parameters about this specific case.

[ ] Basically, any variable that "plugs in" to the process.

[ ] BE CAUTIOUS of anything that might be secure data.

[ ] Avoid putting passwords into SOPs.

[ ] Be sure they are stored and shared appropriately.

[ ] Some SOPs may require more security than others.

[ ] Some SOPs may be relevant only to special groups.

[ ] Use SOPs to capture intellectual equity!

[ ] Use SOPs for onboarding and other training.

[ ] Update your collection of SOPs over time as you learn.

[ ] Template/Master SOPs describe how things should be done.

[ ] Add SOPs when you learn something new.

[ ] Modify SOPs when you find a better process.

[ ] Delete SOPs when redundant, useless, or otherwise replaced.

[ ] Completed SOPs are a great resource.

[ ] Use them for after action reports.

[ ] Use them for research.

[ ] Use them for auditing.

[ ] Use them to track performance.

[ ] Use them as training examples.

[ ] Collaborate with teammates on any changes. M2B!

[ ] Referring to SOPs is a great way to discuss changes.

[ ] Referring to SOPs keeps teams "on the same page."

[ ] Referring to SOPs helps to develop a common language.

[ ] Create SOPs as a planning tool - then work the plan.

[ ] Keep SOPs accessible in some central place.

[ ] Git is a great way to keep, share, and maintain SOPs.

[ ] An easily searchable repository is great (like Gitea?!)

[ ] Be mindful of security concerns.
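As a rough illustration of the marking scheme above (a space for not done, * for in progress, x for completed, - for skipped), here is a minimal Python sketch that tallies the marks in a worked copy of an SOP. The function name `tally_marks`, the status labels, and the sample text are my own assumptions for illustration, not part of any existing tool. It only counts square-bracket steps and ignores notes, conditions, and options.

```python
import re
from collections import Counter

# Marks described in the SOP above; the status labels are illustrative names.
MARKS = {" ": "not done", "*": "in progress", "x": "done", "-": "skipped"}

# A step line starts (after optional indentation) with one mark in square brackets.
STEP = re.compile(r"^\s*\[([ *x-])\]\s+(.*)$")


def tally_marks(text):
    """Count the square-bracket steps in a marked-up SOP copy by status."""
    counts = Counter()
    for line in text.splitlines():
        match = STEP.match(line)
        if match:
            counts[MARKS[match.group(1)]] += 1
    return counts


# A hypothetical worked copy, partway through the process.
sample = """\
[x] Make a copy of the SOP.
[x] Save the copy with a unique name that fits with your workflow
[*] Mark up the copy as you go through the process.
    [x] Check off each step as you proceed.
    [-] Add notes when you do something unexpected.
[ ] Add notes to include any important specific data.
"""

if __name__ == "__main__":
    for status, count in tally_marks(sample).items():
        print(f"{status}: {count}")
```

Because the SOP is plain text, a quick tally like this can show where someone left off after an interruption without any special tooling.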

happy thoughts please

push away the darkening gloom

that threatens to consume

the room between my ears

by stoking all my fears

reminding me of tears

the years that went by wrong

the promises broken

the dreams undone

happy thoughts please

wash off the pain

remove the stains

repair the wounds

evict the gloom

before it turns the light to pain

the morning sun to a cold harsh intruder

painful brutal beastly heartless and unrelenting

happy thoughts

please

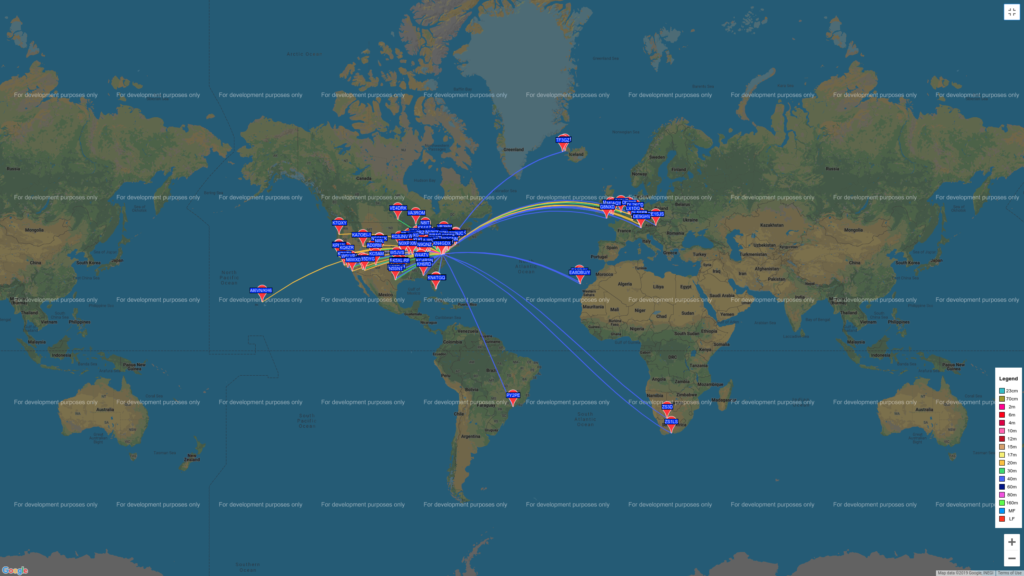

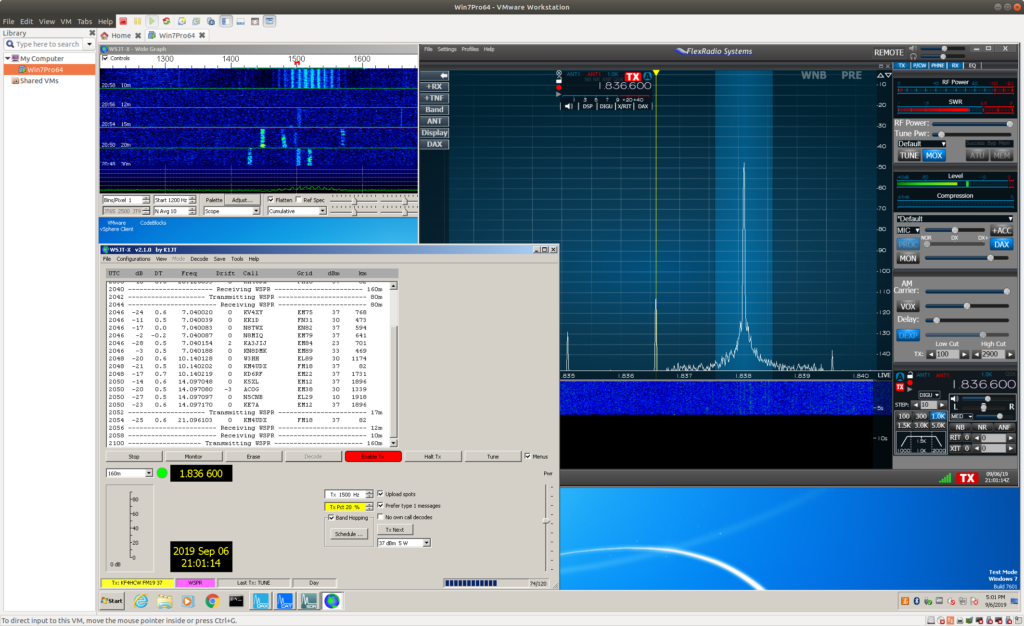

I should have done this forever ago… but I’ve decided to put the brick (flex6300) to work when I’m not using it 🙂 From now on, mostly, when I’m not using it for something else or whenever it won’t interfere with other research I’ll be running WSPR on a VM using the 6300 and my large vertical loop.

He’s seen a hundred come before

They’d rush ahead although forewarned

…and in their haste – get their “reward”

Another verse if you please…

He raised his voice to spread the lore

The signs he posts still go ignored

… as they scurry past the scattered gore

Another verse if you please…

Another verse if you please…

He settles back, can’t bear to watch

He shakes his head, and checks his watch

… and bides his time,

it ticks and talks,

then there’s the cRACk

that tells the tale,

A flash of red,

A tuft of fur,

A FLURRY OF UNBRIDLED MADNESS AND VIOLENCE AND SHIVERS RUN UP HIS SPINE SHAKING HIM TO HIS CORE CRUMBLING HIM TO THE GROUND IN HORROR, and, sorrow, and, loneliness, and despair…

The 2nd mouse gets the cheese.

The 2nd mouse gets the cheese.

Nowhere

Nothing

Zero

Zilch

A quantum foam

Seething with fire

The emptiness burns

A glassy sea

And no this doesn’t rhyme

It’s chaotic

Zilch

Zero

Nothing

Nowhere

Zoom

Out

IT does not compute

IT does not compute

Cat can haz compute?

IT does not compute

green eyes wander through a smoldering sky

without blinking

without fading

piercing the blue orange gradients through to darkness and pinpoints of shiny twinkles

Slow

Blink

grumble rumble rumble rumble

rumble grumble tumble bumble

stumble grumble rumble tumble

rumble humble subtle mumble

WHOOOOSSSSHHH!