MicroNeil has always been interested in the application of synthetic intelligence to real-world problems. So, when we were presented with the challenge of protecting messaging systems (and specifically email) from abuse, we applied machine learning and other AI techniques as part of the solution.

Email processing, and especially filtering, presents a number of challenges:

- The Internet is increasingly a hostile environment.

- Any systems that are exposed to the Internet must be hardened against attack.

- The value of the Internet is derived from its openness. This openness tends to be in conflict with protecting systems from attack. Therefore, security measures must be carefully crafted so that they offer protection from abuse without compromising desirable and appropriate operations.

- The presence of abuse and the corresponding need for sophisticated countermeasures sets up an environment that is constantly evolving and growing in complexity.

- There is disagreement on: what constitutes abuse, the design of countermeasures and safeguards, what risks are acceptable, and what tactics are appropriate.

- All of these conditions change over time.

As a consequence of these circumstances, any successful filtering system must be extremely efficient, flexible, and dynamic. At the same time it must respond to this complexity without becoming too complex to operate. This sounds like a perfect place to apply synthetic intelligence, but in order to do that we need a framework that models an intelligent entity interacting with its environment.

The progressive evaluation model provides precisely that kind of framework while preserving both flexibility and control. This is accomplished by mapping a synthetic environment and the potential responses of an intelligent automaton (agent) onto the state map of the SMTP protocol and the message delivery process.

Each state in the message delivery process potentially represents a moment in the life of the agent where it can experience the conditions present at that moment and determine the next action it should take in response to those conditions. The default action may be to proceed to the next natural step in the protocol but under some conditions the agent might choose to do something else. It may initiate some kind of analysis to gather additional information or it might execute some other intermediate step that manipulates the underlying protocol.

The collection of steps that have been taken at any point and the potential steps that are possible from that point forward represent various “filtering strategies.” Filtering strategies can be selected and adjusted by the agent based on the changing conditions it perceives, successful patterns that it has learned, and the preferences established by administrators and users of the system.

The filtering strategies made available to the agent can be restrictive so that the system’s behavior is purely deterministic; or they can be flexible to allow the agent to learn, grow, and adapt. The constraints and parameters that are established represent the system policy and ultimately define what degrees of freedom are provided to the agent under various conditions. The agent works within these restrictions to optimize the performance of the system.

In a highly restrictive environment the agent might only be allowed to determine which DNSBLs to check based on their speed and accuracy. Suppose there are several blacklists that are used to reject new connections. If one of these blacklists were to become slow to respond or somehow inaccurate (undesirable) then the agent might be allowed to exclude that test from the filtering strategy for a time. It might also be allowed to change the order in which the available blacklists are checked so that faster, less comprehensive blacklists are checked first. This would have the effect of reducing system loads and improving performance by rejecting many connections early in the process and applying slower tests only after the majority of connections have been eliminated.
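The reordering and exclusion described above can be sketched in a few lines. This is only an illustration under stated assumptions: the `BlacklistStats` record, the `plan_blacklist_checks` function, the latency and accuracy limits, and the blacklist names are all hypothetical and are not taken from any MicroNeil product.

```python
from dataclasses import dataclass

@dataclass
class BlacklistStats:
    name: str
    avg_latency_ms: float   # observed mean response time
    accuracy: float         # fraction of listings judged correct, 0.0 to 1.0

def plan_blacklist_checks(stats, max_latency_ms=500.0, min_accuracy=0.9):
    """Return blacklist names to query, fastest first, skipping degraded ones."""
    usable = [s for s in stats
              if s.avg_latency_ms <= max_latency_ms and s.accuracy >= min_accuracy]
    return [s.name for s in sorted(usable, key=lambda s: s.avg_latency_ms)]

# Hypothetical measurements gathered by the agent.
observed = [
    BlacklistStats("bl-comprehensive.example", 220.0, 0.99),
    BlacklistStats("bl-fast.example", 15.0, 0.95),
    BlacklistStats("bl-degraded.example", 1800.0, 0.97),   # too slow for now
    BlacklistStats("bl-inaccurate.example", 30.0, 0.60),   # too inaccurate for now
]
order = plan_blacklist_checks(observed)
print(order)
```

Here the limits play the role of system policy: the administrator fixes them, and the agent only chooses an order and a temporary exclusion list within those bounds.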

A conservative administrator might also permit the agent to select only cached results from some blacklists that are otherwise too slow. The agent might make this choice in order to gain benefit from blacklists that would otherwise degrade the performance of the system. In this scenario the cached results from a slow but accurate blacklist would be used to evaluate each message and the blacklist would be queried out of band for any cache misses. If the agent perceived an improvement in the speed of the blacklist then it could elect to use the blacklist normally again.
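A minimal sketch of the cache-only mode might look like the following. The `CachedBlacklist` class and its method names are assumptions made for illustration, and the slow lookup is passed in as an ordinary function so the example does not depend on any real DNSBL client.

```python
from collections import deque

class CachedBlacklist:
    def __init__(self, query_fn):
        self._query_fn = query_fn   # slow lookup, called only out of band
        self._cache = {}            # ip -> bool (listed or not)
        self._pending = deque()     # cache misses awaiting an out-of-band query

    def check(self, ip):
        """Return True/False from the cache, or None on a miss (no verdict yet)."""
        if ip in self._cache:
            return self._cache[ip]
        if ip not in self._pending:
            self._pending.append(ip)
        return None

    def refresh(self):
        """Run out of band: resolve queued misses with the slow lookup."""
        while self._pending:
            ip = self._pending.popleft()
            self._cache[ip] = self._query_fn(ip)

# Hypothetical slow blacklist, simulated with a set of listed addresses.
listed = {"192.0.2.10"}
bl = CachedBlacklist(lambda ip: ip in listed)
first = bl.check("192.0.2.10")    # cache miss: the message is not delayed
bl.refresh()                      # out-of-band query fills the cache
second = bl.check("192.0.2.10")   # cache hit
```

A miss returns `None` rather than blocking, so the message in flight is evaluated without that blacklist while later messages from the same address benefit from the cached answer.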

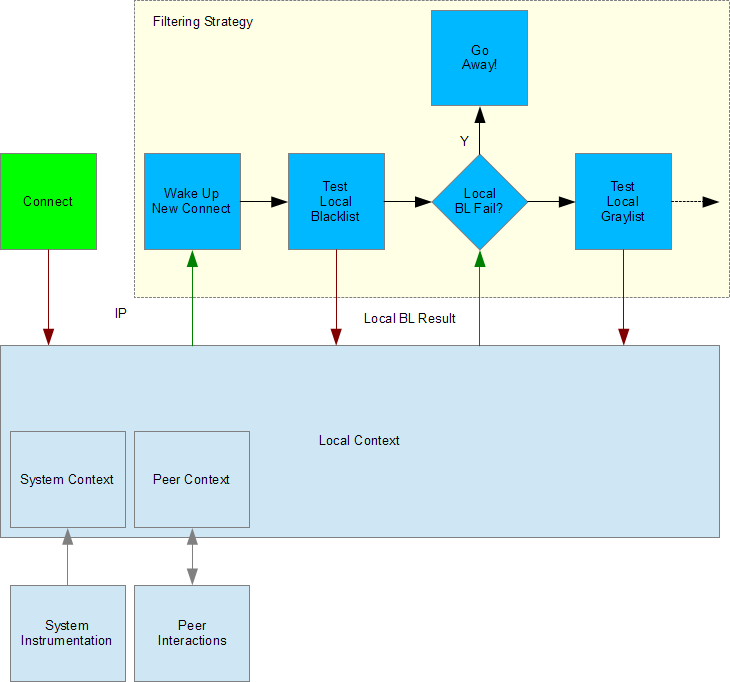

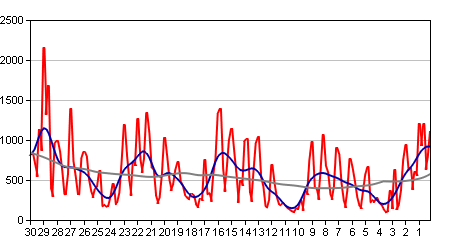

Figure 1 – Basic Progressive Response Model for Email Processing

Refer to Figure 1. Generally the agent “lives” in a sequence of events that are triggered by new information. At the most basic level it is either acting or waiting for new information to arrive. When information is added to its local context (red arrows) then that new information is applied (green arrows) to the current state of the agent (blue boxes).

If the new information is relevant and sufficient then it will trigger the agent to take action again and change its state, thus moving the process forward. Each action is potentially guided by all of the information that is available in the local context, including a complete history of all previous actions.

In Figure 1, an agent waiting asleep is prompted by its local context to let it know that a new connection has occurred. Let’s assume that this particular system is designed so that each agent is assigned a single connection. The agent acts by waking up and changing its state. That action (a change in its own state) triggers the next action, which is to issue a command to test the local blacklist with the new IP. Then, the agent changes its state again to a branching state where it will respond to the local blacklist result once it is available. At this point the agent goes back to a waiting state until new information arrives from the test because it is unable to continue without new information.

Next, the local blacklist result arrives in the local context. This prompts the agent again, causing it to evaluate the local blacklist result. Depending upon that result it will choose one of two filtering strategies to use moving forward: either rejecting the connection or proceeding to another test.

This process continues with the agent receiving new stimuli and responding to those stimuli according to the conditions it recognizes. Each stimulus elicits a response and each response is itself a stimulus. The chain of stimuli and responses causes the agent to interact with the process, following a path through the states made available to it by progressively selecting filtering strategies as it goes.
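The walk-through of Figure 1 can be approximated by a small event-driven state machine. The sketch below is an assumption-laden illustration: the state names, event names, and the `ConnectionAgent` class are invented here to mirror the prose, and a real agent would have many more states.

```python
class ConnectionAgent:
    def __init__(self):
        self.state = "ASLEEP"
        self.history = []   # complete record of the actions taken so far

    def _act(self, action, new_state):
        self.history.append(action)
        self.state = new_state
        return action

    def on_event(self, event, data=None):
        """Apply new information to the current state; return an action or None."""
        if self.state == "ASLEEP" and event == "new_connection":
            # Wake up and ask the local context to test the connecting IP.
            return self._act(("test_local_blacklist", data), "AWAIT_LOCAL_BL")
        if self.state == "AWAIT_LOCAL_BL" and event == "local_blacklist_result":
            if data:   # the IP is listed
                return self._act(("reject_connection", None), "DONE")
            return self._act(("run_next_test", None), "AWAIT_NEXT_TEST")
        return None    # not relevant or not sufficient: keep waiting

agent = ConnectionAgent()
too_early = agent.on_event("local_blacklist_result", True)   # ignored while asleep
first = agent.on_event("new_connection", "203.0.113.5")
second = agent.on_event("local_blacklist_result", False)
```

Each call to `on_event` stands for one stimulus arriving in the local context, and the returned action is the response that the local context would carry out.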

As each step is taken, additional information about the session and each message builds up. Each new piece of information becomes part of the local environment for the agent and allows it to make more sophisticated choices. In addition to conventional test data the agent also builds up other information about its operating environment such as performance statistics about the server, other sessions that are active, partial results from its own calculations, and references to previous “experiences” that are “interesting” to its learning algorithms.

Agents might also communicate with each other to share information. This would allow these agents to form a kind of group intelligence by sharing their experiences and the performance of their filtering strategies. Each agent would gain more comprehensive access to test data and the workload of devising and evaluating new strategies would be divided among a larger and more diverse collection of systems.

The level of sophistication that is possible is limited only by the sophistication of the agent software and the restrictions imposed by system policies. This framework is also flexible enough to accommodate additional technologies as they are developed so that the costs and risks associated with future upgrades are reduced.

Typically any new technologies would appear to the agent as optional tools for new filtering strategies. Existing filtering strategies could be modified to test the qualities of the new tools before allowing them to affect the message flow. This testing might be performed deterministically by the system administrator or the agent might be allowed to adapt to the presence of the new tool and integrate it automatically once it learns how to use it through experimentation.

So far the description we have used is strictly mechanical. Even in an intelligent system it would be possible and occasionally desirable for the system administrator to specify a completely deterministic set of filtering strategies. However, on a system that is not as restrictive there are two opportunities for the intelligence of the agent to emerge.

Parametric adaptation might allow the agent to respond with some flexibility within a given filtering strategy. For example, if the local blacklist test were replaced by a local IP reputation test then the agent might have a variable threshold that it uses to judge whether the connecting IP “failed.” As a result it would be allowed to select filtering strategies based upon learning algorithms that adjust IP reputation thresholds and develop the IP reputation statistics.
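One very simple form of parametric adaptation is a threshold that is nudged whenever feedback shows a mistake. The sketch below assumes a reputation score between 0.0 and 1.0 where higher means worse, and assumes that a later verdict (`was_abusive`) is available for each connection; the class name, step size, and bounds are hypothetical.

```python
class ReputationThreshold:
    def __init__(self, threshold=0.5, step=0.02, lo=0.1, hi=0.9):
        self.threshold = threshold
        self.step = step
        self.lo, self.hi = lo, hi   # policy limits on how far the agent may drift

    def failed(self, score):
        """An IP fails the test when its score reaches the threshold."""
        return score >= self.threshold

    def learn(self, score, was_abusive):
        """Adjust the threshold after the true outcome becomes known."""
        predicted = self.failed(score)
        if predicted and not was_abusive:
            # False positive: be more lenient.
            self.threshold = min(self.hi, self.threshold + self.step)
        elif not predicted and was_abusive:
            # False negative: be stricter.
            self.threshold = max(self.lo, self.threshold - self.step)

t = ReputationThreshold(threshold=0.5, step=0.1)
t.learn(0.6, was_abusive=False)   # a good sender was failed, so the threshold rises
after_false_positive = t.threshold
t.learn(0.4, was_abusive=True)    # an abuser was passed, so the threshold falls
after_false_negative = t.threshold
```

The `lo` and `hi` bounds illustrate how system policy can limit the degrees of freedom given to the agent even while it is learning.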

Structural adaptation might allow the agent to swap out components of filtering strategies. Segments of filtering strategies might be represented in a genetic algorithm. After each session is complete the local context would contain a complete record of the strategies that were followed and the conditions that led to those strategies. Each of these sessions could be added to a pool, evaluated for fitness (out of band), and the most successful strategies could then be selected to produce a new population of strategies for trial. A more sophisticated system might even simulate new strategies using data recorded in previous sessions so that the fitness of new filtering strategies could be predicted before testing them on live messages.
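As a rough sketch of how strategy segments could be recombined, the code below treats a filtering strategy as a fixed-length list of segment names and produces a new population from scored sessions. Everything here is assumed for illustration: the segment names, the fitness values, and the particular choice of elitism, one-point crossover, and occasional mutation are just one of many possible genetic algorithm designs.

```python
import random

# Hypothetical strategy segments the agent is permitted to combine.
SEGMENTS = ["local_bl", "dnsbl_fast", "dnsbl_slow", "ip_reputation", "content_scan"]

def crossover(a, b, rng):
    """One-point crossover: the head of one parent joined to the tail of the other."""
    cut = rng.randint(1, min(len(a), len(b)) - 1)
    return a[:cut] + b[cut:]

def next_generation(scored, rng, keep=2, mutation_rate=0.1):
    """scored: list of (strategy, fitness). Returns a new population of equal size."""
    ranked = [s for s, _ in sorted(scored, key=lambda p: p[1], reverse=True)]
    parents = ranked[:keep]
    children = list(parents)              # the fittest strategies survive unchanged
    while len(children) < len(scored):
        a, b = rng.sample(parents, 2)
        child = crossover(a, b, rng)
        if rng.random() < mutation_rate:  # occasionally swap in a random segment
            child[rng.randrange(len(child))] = rng.choice(SEGMENTS)
        children.append(child)
    return children

rng = random.Random(0)
# Fitness values would come from the out-of-band evaluation of recorded sessions.
scored = [
    (["local_bl", "dnsbl_fast", "content_scan"], 0.91),
    (["dnsbl_slow", "ip_reputation", "content_scan"], 0.42),
    (["local_bl", "ip_reputation", "dnsbl_slow"], 0.77),
    (["content_scan", "dnsbl_slow", "local_bl"], 0.30),
]
population = next_generation(scored, rng)
```

The new population would then be put on trial, or simulated against recorded sessions, before any of its members are allowed to affect live messages.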

Structural and parametric adaptation allow an agent to explore a wide range of strategies and tuning parameters so it can adopt strategies that produce the best performance across a range of potentially conflicting criteria. In order to balance the need for both speed and accuracy the agent might evolve a progressive filtering strategy that leverages lightweight tests early in the process in order to reduce the cost of performing more sophisticated tests later in the process. It might also improve accuracy by combining the scores of less accurate tests using various tunable weighting schemes in order to refine the results.
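One of the simplest tunable weighting schemes is a weighted average of individual test scores. The sketch below assumes each test reports a score between 0.0 and 1.0; the function name, test names, and weights are hypothetical, and in an adaptive system the weights would be among the parameters the agent tunes.

```python
def combined_score(results, weights):
    """Weighted average of the test scores that are present in `results`."""
    total = sum(weights[name] for name in results)
    if total == 0:
        return 0.0
    return sum(weights[name] * score for name, score in results.items()) / total

# Hypothetical results from two lightweight tests.
weights = {"ip_reputation": 3.0, "helo_check": 1.0}
results = {"ip_reputation": 1.0, "helo_check": 0.0}
score = combined_score(results, weights)
print(score)
```

Because only the tests that actually ran contribute to the total weight, the same function can be applied at any point in a progressive strategy, before or after the more expensive tests have reported.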

Another interesting adaptation might depend on session-specific parameters such as the connecting system address range, HELO and MAIL FROM: information, header structure, or even the timing and sequence of the events in the underlying protocol. Over time the agent might learn to use different strategies for messages that appear to be from banks, online services, or dynamic address ranges.

Given enough flexibility and sensitivity it could learn to recognize early clues in the message delivery process and select from dozens of highly tuned filtering strategies that are each optimized for their own class of messages. For example it might learn to recognize and distrust systems that stall on open connections, attempt to use pipelining before asking permission, or attempt to guess recipient addresses through dictionary attacks.

It might also learn to recognize that messages from particular senders always include specific features. Any messages that disagree with the expected models would be tested by filtering strategies that are more “careful” and apply additional tests.

Systems with intelligent agents have the ability to adapt automatically as operating conditions change, new tests are made available, and test qualities change over time. This ability can be extended if collections of agents are allowed to exchange some of their more successful “formulas” with each other so that all of the participating agents can learn best practices from each other. Agents that share information tend to converge on optimal solutions more quickly.

There are also potential benefits to sharing information between systems of different types. Intelligent intrusion detection systems, application servers, firewalls, and email servers could collaborate to identify attackers and harden systems against new attack vectors in real time. Specialized agents operating in client applications could further accelerate these adaptations by contributing data from the end user’s point of view.

Of course, optimizing system performance and responding to external threats are only parts of the solution. In order to be successful these systems must also be able to adapt to changing stakeholder preferences.

Consider that a large scale filtering system needs to accommodate the preferences of system administrators in charge of managing the infrastructure, group administrators in charge of managing groups of email domains and/or email users, power users who desire a high degree of control, and ordinary users who simply want the system to work reliably and automatically.

In a scenario like this various parts of the filtering strategy might be modified or swapped into place at various stages based on the combined preferences of all stakeholders.

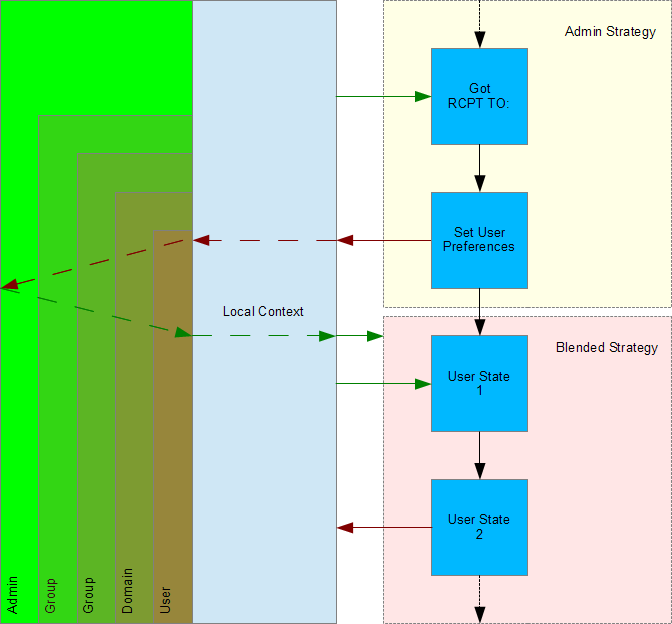

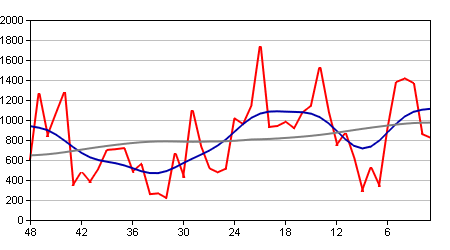

Figure 2 – Structural Adaptation Per User

At any point during the progressive evaluation process it is possible to change the remaining steps in the filtering strategy. The change might be in response to the results of a test, results from an analysis tool, a change in system performance data, or new information about the message.

In Figure 2 we show how the filtering strategy established by the administrator is followed until the first recipient is established. The first recipient is interpreted as the primary user for this message. Once the user is known the remainder of the filtering strategy is selected and adjusted based on the combined user, domain, group, and administrator preferences that apply.

Beginning with settings established by the system administrator each successively more specific layer is allowed to modify parts of the filtering strategy so that the composite that is ultimately used represents a blend of all relevant preferences. The higher, more general layers determine the default settings and establish how preferences can be modified by the lower, more specific layers.

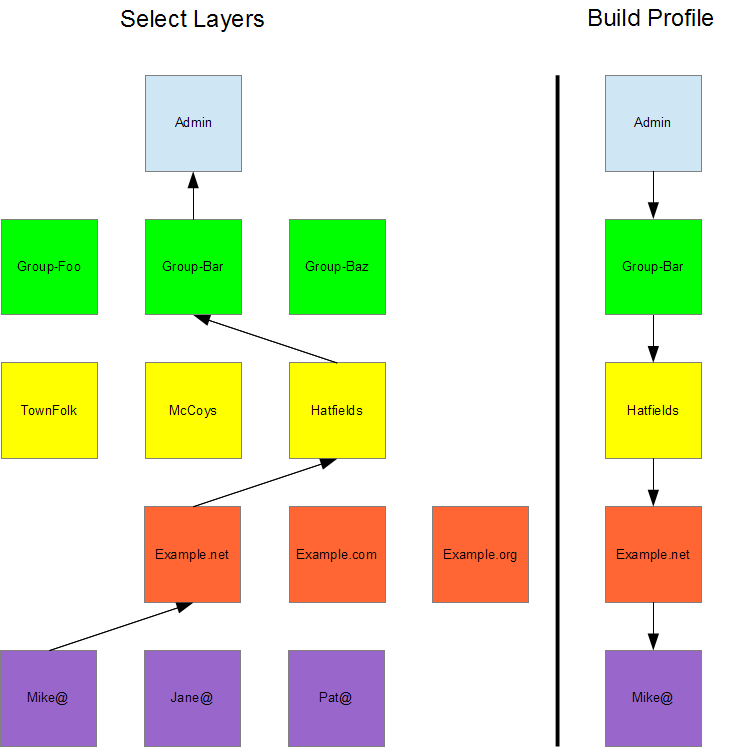

Figure 3 – Blended Profile Selection

Refer to Figure 3. The applicable layers are selected from the bottom up. The specific user belongs to a domain, a domain belongs to a group, a group may belong to another group, and all top level groups belong to the system administrator. Once a specific user (recipient) is identified then the applicable layers can be selected by following a path through the parent of each layer until the top (administrator) layer is reached. Then, the defaults set by the administrator are applied downward and modified by each layer along the same path until the user is reached again. The resulting preferences contain a blend of the preferences defined at each layer.
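The bottom-up selection and top-down blending just described can be sketched as follows. The `Layer` class, the `locked` mechanism for restricting what lower layers may change, and the preference keys are assumptions introduced for this example only.

```python
class Layer:
    def __init__(self, name, parent=None, settings=None, locked=()):
        self.name = name
        self.parent = parent            # next more general layer, or None at the top
        self.settings = settings or {}
        self.locked = set(locked)       # keys that more specific layers may not change

def blended_preferences(user_layer):
    # Select the applicable layers from the bottom up.
    path = []
    layer = user_layer
    while layer is not None:
        path.append(layer)
        layer = layer.parent
    # Apply settings from the top down, honoring locks set by higher layers.
    blended, locked = {}, set()
    for layer in reversed(path):
        for key, value in layer.settings.items():
            if key not in locked:
                blended[key] = value
        locked |= layer.locked
    return blended

admin = Layer("admin", settings={"reject_listed": True, "spam_threshold": 0.8},
              locked=["reject_listed"])
group = Layer("group", parent=admin, settings={"spam_threshold": 0.7})
domain = Layer("domain", parent=group)
user = Layer("user", parent=domain,
             settings={"reject_listed": False, "spam_threshold": 0.6, "tag_subject": True})
prefs = blended_preferences(user)
print(prefs)
```

In this example the user's attempt to change `reject_listed` is ignored because the administrator layer locked it, while the user's threshold and tagging preferences are accepted.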

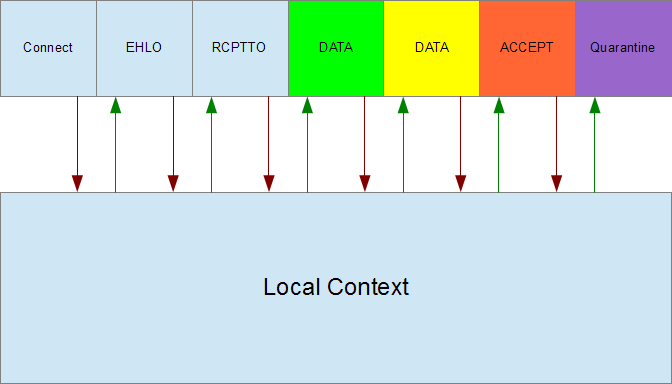

Figure 4 – Composite Strategy Interaction

It is important to note that these drawings are potentially misleading in that they may appear to show that the agent is responsible for executing the SMTP protocol and all that is implied. In practice that would not be the case. Some of the key states in the illustrated filtering strategies have been named for states in the SMTP protocol because the agent is intended to respond to those specific conditions. However, the machinery of the protocol itself is managed by other parts of the software – most likely embedded in the machinery that builds and maintains the local context.

You could say that the local context provides the world where the intelligent agent lives. The local context implements an API that describes what the agent can know and how it can respond. The agent and the local context interact by passing messages to each other through this API.

Typically the local context and the agent are separate modules. The local context module contains the machinery for interacting with the real world, interpreting its conditions, and presenting those conditions to the agent in a form it can understand. The agent module contains the machinery for learning and adapting to the artificial world presented by the local context. Both of these modules can be developed and maintained independently as long as the API remains stable.
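The separation between the two modules might be expressed as a small interface. In the sketch below the `Agent` protocol, the `LocalContext` class, the `perceive` and `deliver` method names, and the toy agent are all hypothetical; the point is only that the agent sees events and names actions, while the local context decides which actions exist and carries them out.

```python
from typing import Any, Optional, Protocol

class Agent(Protocol):
    def perceive(self, event: str, data: Any) -> Optional[str]:
        """Receive one event and return the name of an action, or None."""
        ...

class LocalContext:
    def __init__(self, agent, handlers):
        self.agent = agent
        self.handlers = handlers   # action name -> callable that performs it

    def deliver(self, event, data=None):
        """Pass an event to the agent and carry out its response if permitted."""
        action = self.agent.perceive(event, data)
        handler = self.handlers.get(action)
        if handler is None:
            return None            # no action, or one the API does not offer
        handler(data)
        return action

class SimpleAgent:
    """Toy agent: asks for a blacklist test whenever a connection arrives."""
    def perceive(self, event, data):
        return "test_local_blacklist" if event == "new_connection" else None

tested = []
context = LocalContext(SimpleAgent(), {"test_local_blacklist": tested.append})
result = context.deliver("new_connection", "203.0.113.5")
ignored = context.deliver("banner_sent")
```

Either side can be replaced without touching the other as long as the event names, action names, and method signatures stay the same.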

It should be noted that this kind of framework can be applied broadly to many kinds of systems – not just email processing and other systems on the Internet. It is possible to map synthetic intelligence like this into any system that has sufficiently structured protocols and can tolerate some inconsistency during adaptation. The protocols provide a foundation upon which an intelligent agent can “grow” its learning processes. A tolerance for adaptation provides a venue for intelligent experimentation and optimization to occur.

Further, the progressive evaluation model is not limited to large-scale processes like message delivery. It can also inform the development of smaller applications and even specialized functions embedded in other programs. A lightweight implementation of this technique underpins the design of the pattern matching engine used in Message Sniffer. Unlike conventional pattern matching engines, Message Sniffer uses a swarm of lightweight intelligent agents that explore their data set collaboratively in the context of an artificial “world” that is structured to represent their collective knowledge. Each of these agents progressively evaluates its location in the world, its location in the data set, its own state, and the locations and states of its peers. This approach allows the engine to be extremely efficient and virtually unaffected by the number of patterns it must recognize simultaneously.

Broadly speaking, this technique can be applied to a wide range of tasks such as automated network management, data systems provisioning, process control and diagnostics, interactive help desks, intelligent data mining, logistics, robotics, flight control systems, and many others.

Of course, email processing is a natural fit for applications that implement the Progressive Evaluation Model as a way to leverage machine learning and other AI techniques. The Internet community has already demonstrated a willingness to “bend” the SMTP protocol when necessary, SMTP provides a good foundation upon which to build intelligent interactive agents, and messaging security is a complex, dynamic problem in search of strong solutions.